Category: Sysadmin

-

Using NGINX Ingress Controller on Google Kubernetes Engine

If you’ve used Kubernetes, you might have come across Ingress, which manages external access to services in a cluster, typically over HTTP. When running on GKE, the “default” is GLBC, which is a “load balancer controller that manages external loadbalancers configured through the Kubernetes Ingress API”. It’s easy to use but doesn’t let you…

-

Restore single table from full MySQL database dump

Playing with data in databases is sometimes tricky, but when you get down to it, it’s just a couple of lines on the command line. Some time ago we switched from Piwik PRO to Matomo and of course we wanted to migrate the logs. We couldn’t just use the full MySQL / MariaDB database dump and go with…

-

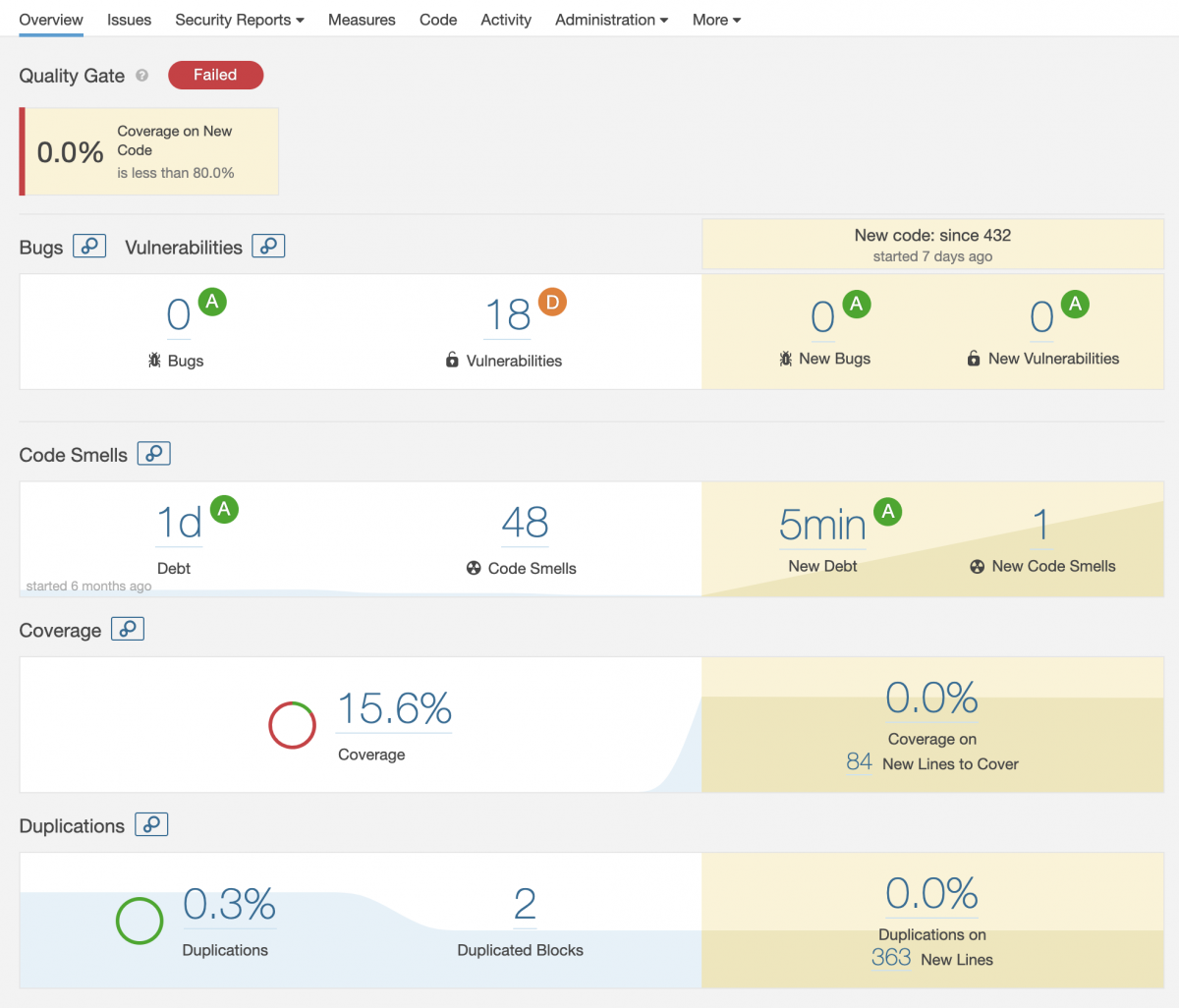

Code quality metrics for Kotlin project on SonarQube

Code quality in software development projects is important and a good metric to follow. Code coverage, technical debt, vulnerabilities in dependencies and conformance to code style rules are a couple of things you should follow. There are some de facto tools you can use to visualize things and one of them is SonarQube.… Continue reading →

-

Dockerizing all the things: Running Ansible inside Docker container

Automating things in software development is more than useful, and using Ansible is one way to automate software provisioning, configuration management, and application deployment. Normally you would install Ansible on your control node just like any other application, but an alternate strategy is to deploy Ansible inside a standalone Docker image.… Continue reading →

-

Avoiding JVM delays caused by random number generation

The library used for random number generation in Oracle’s JVM relies on /dev/random by default for UNIX platforms. This can potentially block the WebLogic Server process because on some operating systems /dev/random waits for a certain amount of “noise” to be generated on the host machine before returning a result. Although /dev/random is more secure,…

-

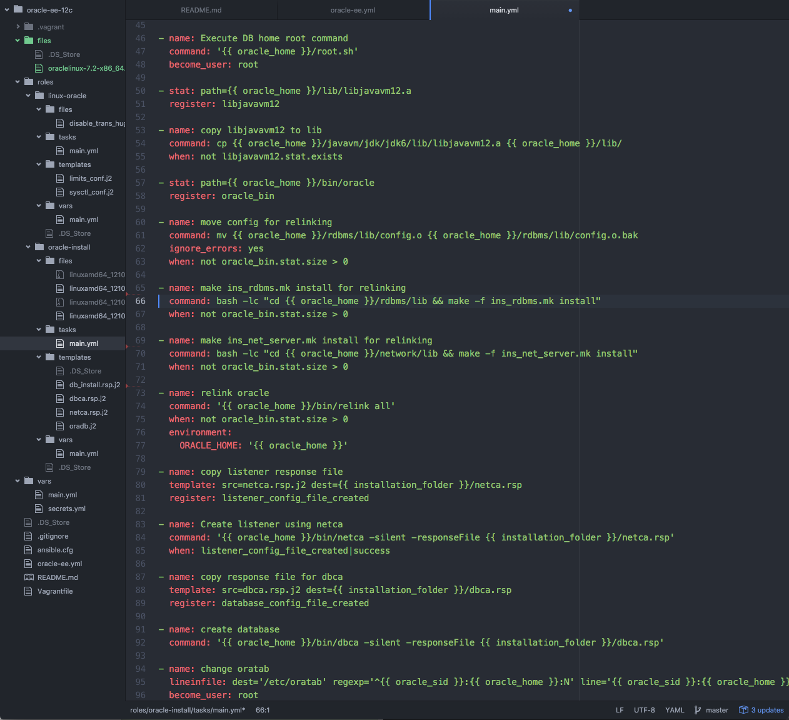

Problems with installing Oracle DB 12c EE, ORA-12547: TNS: lost contact

For development purposes I wanted to install Oracle Database 12c Enterprise Edition on a Vagrant box so that I could play with it. It should’ve gone quite straightforwardly, but in my case things got complicated although I had Oracle Linux and the prerequisites fulfilled. Everything went fine until it was time to run the DBCA…

-

Using Let’s Encrypt SSL certificates on CentOS 6

Let’s Encrypt is now in public beta, meaning you can get valid, trusted SSL certificates for your domains for free. Free SSL certificates for everyone! As Let’s Encrypt is relatively easy to set up, there’s now no reason not to use HTTPS for your sites. The needed steps are described in the documentation and here’s a short…

-

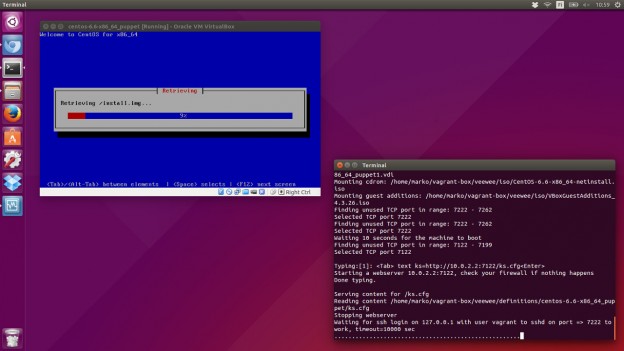

Creating Vagrant Base Box with Veewee

Vagrant is a great tool for creating and configuring lightweight, reproducible, portable virtual machine environments, but the first step for using Vagrant, downloading an existing “base box”, raises some questions. E.g. how are these unverified boxes built? So, you might end up building your own base box, which is often time-consuming and cumbersome.… Continue…

-

Disabling Derby in Oracle WebLogic 12c

Oracle WebLogic has some interesting traits that frustrate developers. From WebLogic 10.3.4 onward, the Apache Derby database is included in the installation. That’s fine, but from the 12.1.2 release it also starts automatically, which is usually unwanted, useless and a waste of resources. Previous versions of WebLogic didn’t automatically start the Derby database.… Continue reading…

-

Authentication with LDAP provider in WebLogic gets stuck

Lately we upgraded our Java EE applications to a new platform and began seeing stuck threads and slow startup times. The platform was upgraded from OC4J to WebLogic 12c, and the underlying LDAP service was also changed to Oracle Access Manager. Looking at the server logs, one possible reason for the stuck threads was quite clear:…