Tag: notes

-

Notes from React Native EU 2022

React Native EU 2022 was held a couple of weeks ago. The conference focuses exclusively on React Native but also covers general software development topics applied to the RN context. This year the online event provided great talks, and many of the presentations were about app performance improvements,…

-

Notes from DEVOPS 2020 Online conference

DevOps 2020 Online was held on 21.4. and 22.4.2020. The first day covered Cloud & Transformation and the second was the 5G DevOps Seminar. Here are some quick notes from the talks I found the most interesting. The talk recordings are available from the conference site. DevOps 2020 How to improve your DevOps capability in…

-

Weekly notes 1

For some time I’ve been reading several newsletters to keep track of what happens in the field of software development, and the intention was also to share the interesting parts here. And now it’s time to move from intent to action. In the new “Weekly notes” series I share the interesting articles I have read with…

-

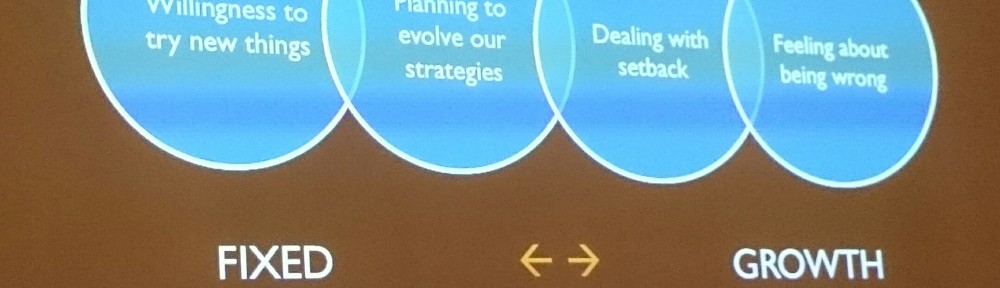

Notes from Tampere goes Agile 2015

What could be a better way to spend a beautiful autumn Saturday than visiting Tampere goes Agile and being inspired beyond agile? Well, I can think of a couple of activities which beat waking up at 5:30 to catch a train to Tampere, but attending a conference and listening to thought-provoking presentations is always refreshing.…