Tag: security

-

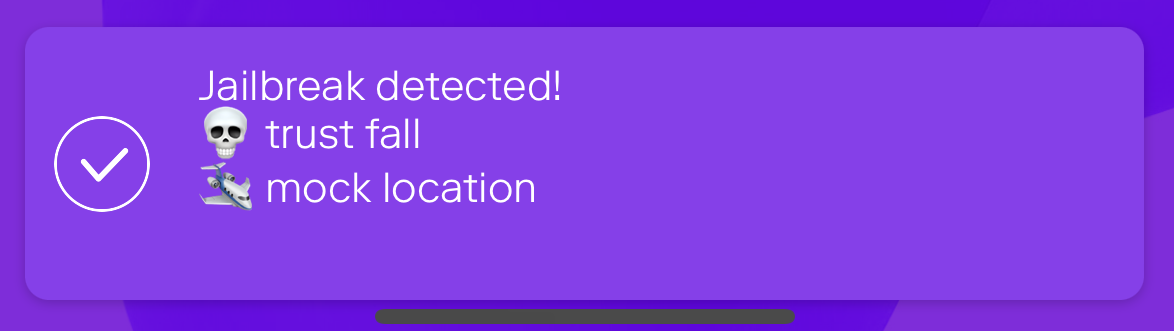

Jailbreak detection with jail-monkey on React Native app

Mobile operating systems often restrict what users can do on a device, such as which apps can be installed and what information and data apps and users can access. These limitations can be bypassed by jailbreaking or rooting the device, which might introduce security…

-

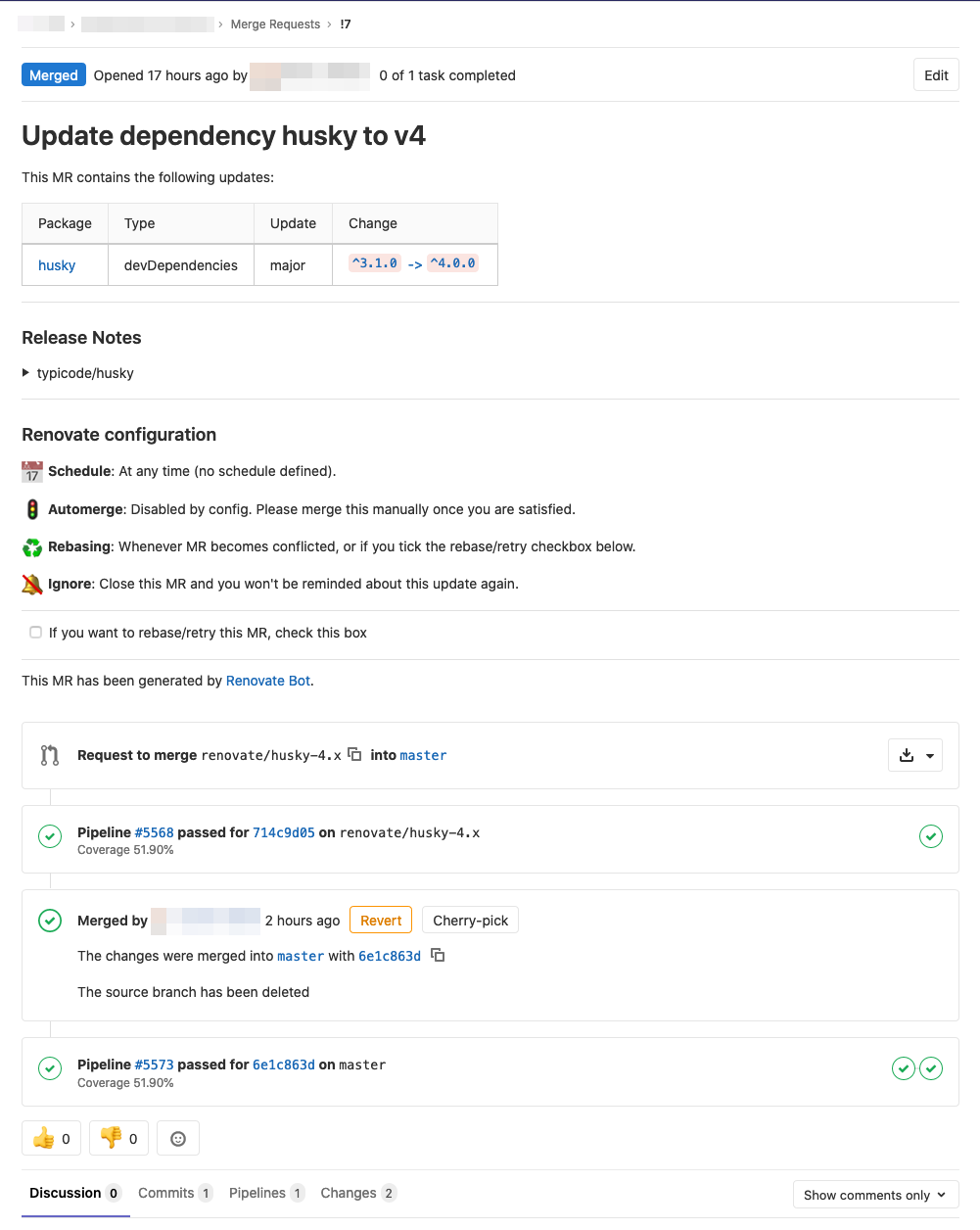

Automate your dependency management using update tool

Software often consists of not just your own code but also third-party libraries and other software that have their own update cycles, with new versions released now and then that fix vulnerabilities and add new features. The question is: what is your dependency management strategy, and how do…

-

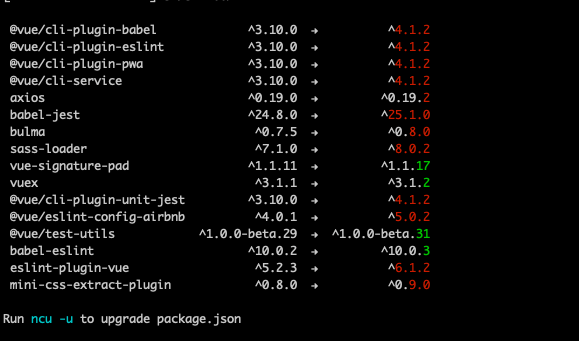

Tracking vulnerabilities and keeping Node.js packages up to date

Software evolves quickly and new versions of libraries are released, but how do you keep track of updated dependencies and vulnerable libraries? Managing dependencies has always been somewhat of a pain point, but it is an important part of software development: it’s better to track vulnerabilities and run fresh packages than to be pwned.… Continue reading →

-

Notes from security in the age of Docker & Kubernetes

Security is always the more obscure part of software development, and while container runtimes provide good isolation from the host operating system when you use Docker and run containers in Kubernetes, you should not assume you are free from exploits. Remember to use the same best practices you followed when you were not using containers.… Continue reading →

-

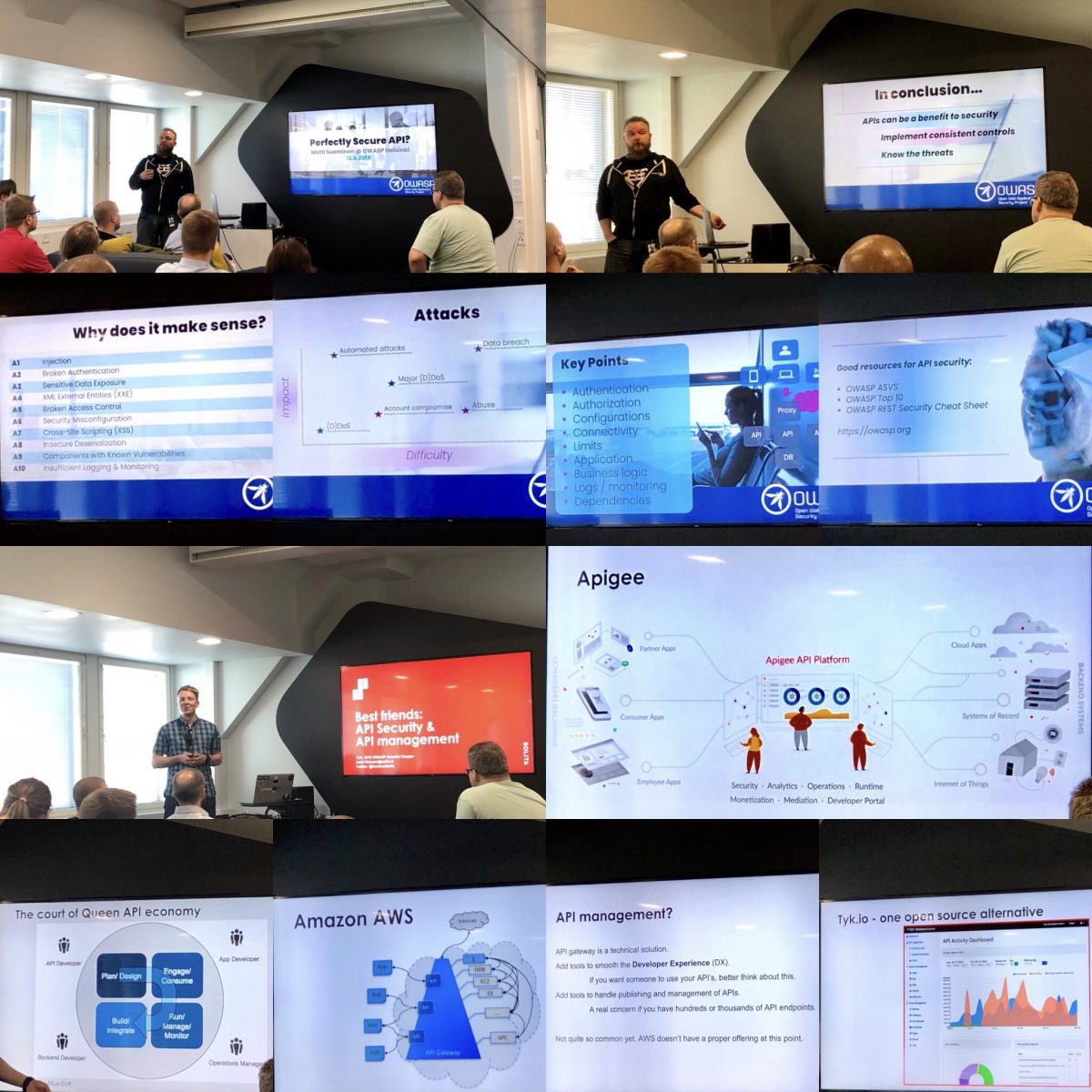

OWASP Helsinki chapter meeting 34: Secure API

The OWASP Helsinki chapter held meeting number 34 last week at Eficode, with the topics “Perfectly secure API” and “Best friends: API security & API management”. The event gave a good overview of the topics covered and was quite packed with people. Eficode’s premises were modern and there were snacks and beverages.… Continue reading →

-

Keeping data secured with iStorage datAshur Personal2 USB flash drive

Knowledge is power, and keeping it secured from unauthorised eyes is important, be it inside a computer, on an external hard drive or on a USB flash drive. Small external devices in particular are easy to lose and can leave your data vulnerable if not encrypted. Fortunately there are solutions like iStorage datAshur Personal2, which is an…

-

Build secure Web applications by reading Iron-Clad Java

Building secure Web applications isn’t easy and involves many aspects that the development team has to take into account. The book “Iron-Clad Java: Building Secure Web Applications” is a good starting point for learning the concepts, tactics, patterns and anti-patterns needed to develop, deploy and maintain secure Java applications. With 304 pages, the book is more about…