Category: Howto

-

Generating JWT and JWK for information exchange between services

Securely transmitting information between services and authorization can be achieved with using JSON Web Tokens. JWTs are an open, industry standard RFC 7519 method for representing claims securely between two parties. Here’s a short explanation and guide of what they are, their use and how to generate the needed things. “JSON Web Token (JWT) is…

-

Using NGINX Ingress Controller on Google Kubernetes Engine

If you’ve used Kubernetes you might have come across Ingress which manages external access to services in a cluster, typically HTTP. When running with GKE the “default” is GLBC which is a “load balancer controller that manages external loadbalancers configured through the Kubernetes Ingress API”. It’s easy to use but doesn’t let you to to…

-

Reset Hasura migrations and squash files

Using GraphQL for creating REST APIs is nowadays popular and there are different tools you can use. One of them is Hasura which is an open-source engine that gives you realtime GraphQL APIs on new or existing Postgres databases. Hasura is quite easy to work with but if your GraphQL schemas change a lot it creates…

-

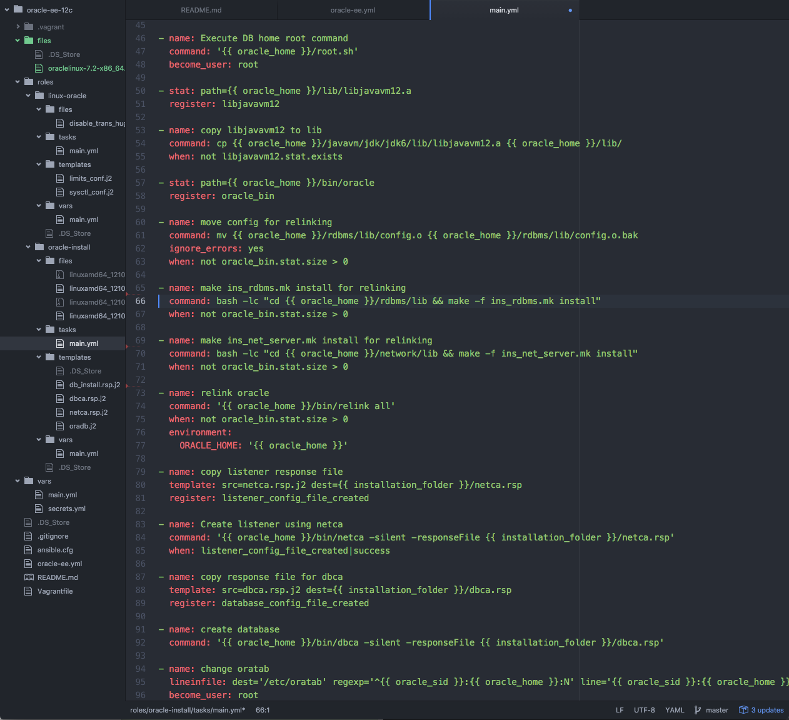

Problems with installing Oracle DB 12c EE, ORA-12547: TNS: lost contact

For development purposes I wanted to install Oracle Database 12c Enterprise Edition to Vagrant box so that I could play with it. It should’ve gone quite straight forwardly but in my case things got complicated although I had Oracle Linux and the pre-requirements fulfilled. Everything went fine until it was time to run the DBCA…

-

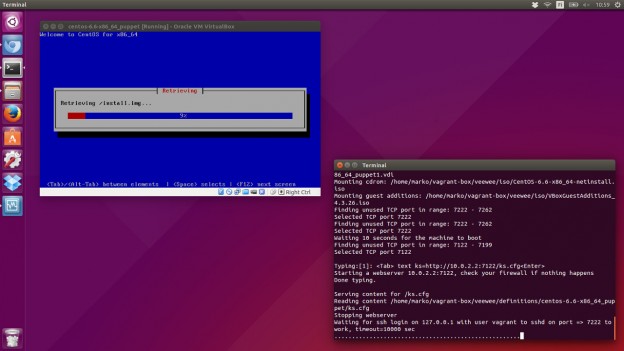

Creating Vagrant Base Box with Veewee

Vagrant is a great tool for creating and configuring lightweight, reproducible, portable virtual machine environments but the first step for using Vagrant, downloading an existing “base box”, raises some questions. E.g. How are these unverified boxes built? So, you might end up building your own base box which is often time consuming and cumbersome.… Jatka…

-

Disabling Derby in Oracle WebLogic 12c

Oracle WebLogic has some interesting traits to help developers frustrate. From Weblogic 10.3.4 and above the Apache Derby Database is included in the installation. That’s fine but from 12.1.2 release it also starts automatically which is usually unwanted, useless and waste of resources. Previous versions of WebLogic didn’t automatically start the Derby database.… Jatka lukemista…

-

Connecting Jabra HALO2 Bluetooth Headset with Windows 7

Recently I got Jabra HALO2 Bluetooth headset for teleconferences but had problems to get it work with Windows 7 and Dell Latitude E6530. Windows found the device and wanted to install drivers but couldn’t find any. The solution was easy: update your laptops’ Bluetooth drivers. I downloaded Dell Wireless 380 Bluetooth Application version 6.5.1.4000,A02 from…

-

Do a clean install of Windows 8 with an upgrade key

There are times when you have to do a clean install of your Windows 8 but if you have just an upgrade key you need to make couple of extra hoops before you can activate the new install. The upgrade key doesn’t prevent you installing to a clean disk but when you try to activate,…

-

Setting up LAMP stack on OS X

Setting up LAMP stack for web development on OS X can be done with 3rd party software like MAMP but as Mac OS X comes with pre-installed Apache and PHP it’s easy to use the native setup. You just need to configure Apache, PHP and install MySQL. Setup Apache2 Set up the Server Name to…

-

Web application test automation with Robot Framework

Software quality has always been important but seems that lately it has become more generally acknowledged fact that quality assurance and testing aren’t things to be left behind. With Java EE Web applications you have different ways to achieve test coverage and test that your application works with tools like JUnit, Mockito and DBUnit.… Jatka…