Tag: testing

-

Notes of Best Practices for writing Playwright tests

Playwright “enables reliable end-to-end testing for modern web apps”, and its documentation includes a good Best Practices section that helps you write more resilient tests. If you’ve written automated end-to-end tests with Cypress or another tool, you probably already know the basics of how to construct robust tests…

-

Automated End-to-End testing React Native apps with Detox

Everyone knows the importance of testing in software development projects, so let’s jump directly to the topic of how to use Detox for end-to-end testing of React Native applications. It’s similar to end-to-end testing React applications with Cypress, which I wrote about previously. Testing React Native applications needs a bit more setup, especially…

-

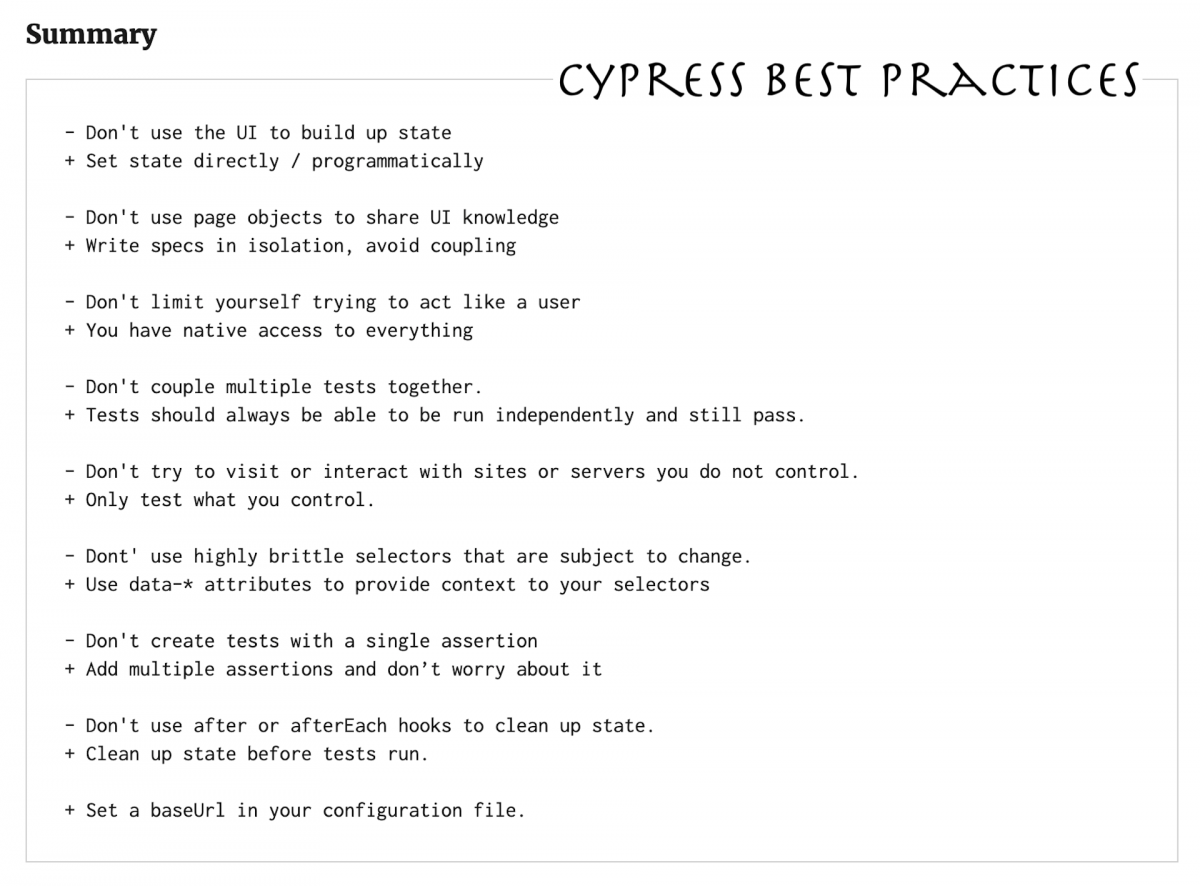

Notes of Best Practices for writing Cypress tests

Cypress is a nice tool for end-to-end tests, and it has good material on best practices, including the documentation and the “Cypress Best Practices” talk by Brian Mann at Assert(JS) 2018. Here are my notes from the talk combined with the Cypress documentation. This article assumes you know Cypress and have it running.… Continue reading →

-

Web application test automation with Robot Framework

Software quality has always been important, but lately it seems to have become a more generally acknowledged fact that quality assurance and testing aren’t things to be left behind. With Java EE web applications you have different ways to achieve test coverage and to test that your application works, using tools like JUnit, Mockito and DBUnit.… Continue…