Tag: devops

-

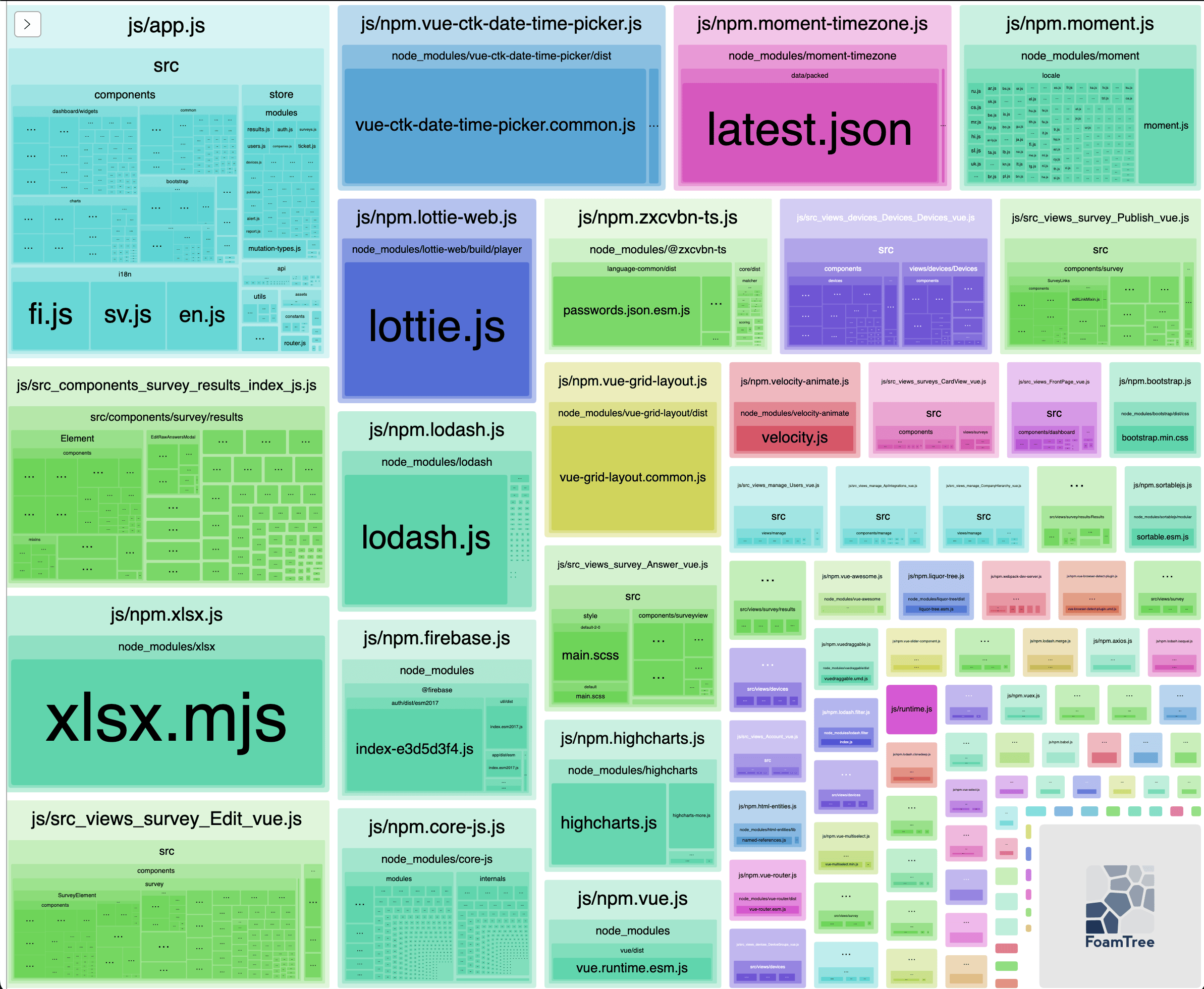

Analyzing Webpack bundles

Different tools can package your software by bundling libraries and your code into groups of files (called chunks). Sometimes you might wonder why the chunks are so big and why some libraries are included even though a given view does not use them (code splitting). There are different tools to analyze and visualize…

-

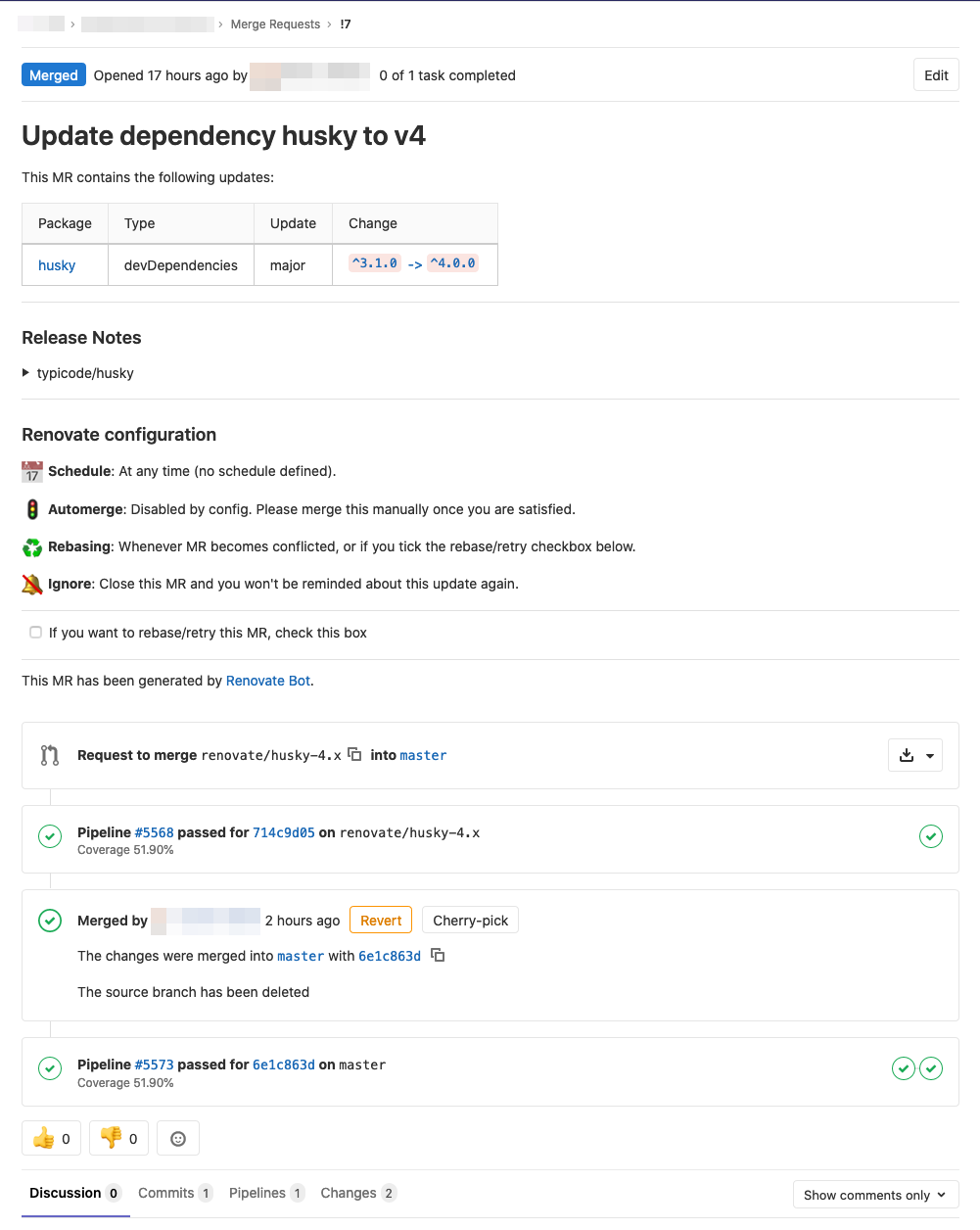

Automate your dependency management using an update tool

Software often consists of not just your own code but also third-party libraries and other software, which have their own update cycles: new versions are released now and then with vulnerability fixes and new features. Now the question is what your dependency management strategy is and how do…

-

Notes from DEVOPS 2020 Online conference

DevOps 2020 Online was held on 21.4. and 22.4.2020. The first day covered Cloud & Transformation and the second was the 5G DevOps Seminar. Here are some quick notes from the talks I found the most interesting. The talk recordings are available from the conference site. DevOps 2020 How to improve your DevOps capability in…

-

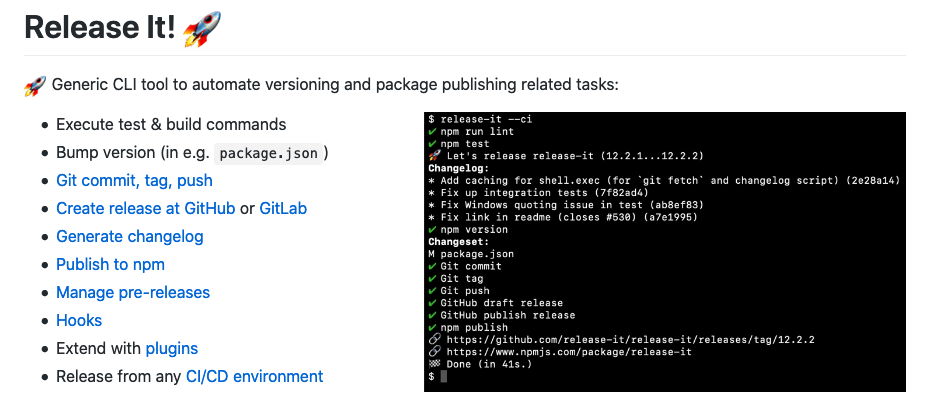

Automate versioning and changelog with release-it on GitLab CI/CD

It’s said that you should automate all the things, and one of those things could be versioning your software. Incrementing the version number in, e.g., your package.json is easy, but it’s even easier when you build it into your continuous integration and continuous deployment process. There are different tools you can use to achieve your needs…

-

Reset Hasura migrations and squash files

Using GraphQL for creating REST APIs is popular nowadays, and there are different tools you can use. One of them is Hasura, an open-source engine that gives you realtime GraphQL APIs on new or existing Postgres databases. Hasura is quite easy to work with, but if your GraphQL schemas change a lot it creates…

-

Git pre-commit and pre-receive hooks: validating YAML

Software development has many steps that you can automate, and one useful thing to automate is validating your commits to version control with Git hooks. Git hooks fire off custom client-side and server-side scripts when certain important actions occur. Validating committed files’ contents is important for syntax validity and even more when providing Spring…

-

Dockerizing all the things: Running Ansible inside Docker container

Automating things in software development is more than useful, and using Ansible is one way to automate software provisioning, configuration management, and application deployment. Normally you would install Ansible on your control node just like any other application, but an alternative strategy is to deploy Ansible inside a standalone Docker image.…

-

Nebula Tech Thursday – Beer & DevOps 2.3.2017

Agile software development for the cloud is nowadays more the rule than the exception, and that’s also what the topics of this year’s first Nebula Tech Thursday were about. The event was held on 2.3.2017 at Woolshed Bar & Kitchen alongside good food and beer. The event consisted of talks about “Building a Full Devops Pipeline…

-

DevOps Finland Meetup goes Mobile at Zalando

Development and operations, DevOps, is in my opinion essential for getting things done in a timely manner, and it’s always good to hear how others are doing it by attending meetups. This time DevOps Finland went Mobile and we heard nice presentations about continuous delivery for mobile applications, mobile testing with Appium and the Robot Framework…

-

Docker containers and using Alpine Linux for minimal base images

After using Docker for a while, you quickly realize that you spend a lot of time downloading or distributing images. This is not necessarily a bad thing for some, but others who scale their infrastructure are required to store a copy of every image that’s running on each Docker host.…