-

First ever SaimaaSec event at LUT

The first ever SaimaaSec meetup was organized last week and featured three presentations from the infosec and cybersecurity industry. The event was held at LUT University, which also sponsored it. Here are my (very) short notes from the presentations. War stories from Incident Response – key takeaways: Juho Jauhiainen talked about incident response and war…

-

Monthly notes 57

Issue 57, 22.12.2022. Cloud: Recap of AWS re:Invent 2022: An Honest Review. “Properly assess whether all those announcements should mean anything to you; here’s the ultimate AWS re:Invent 2022 recap you were looking for.” (from CloudSecList) Web development: State of Frontend in 2022. “React is king, Svelte is gaining popularity, Typescript continues to make web development less…

-

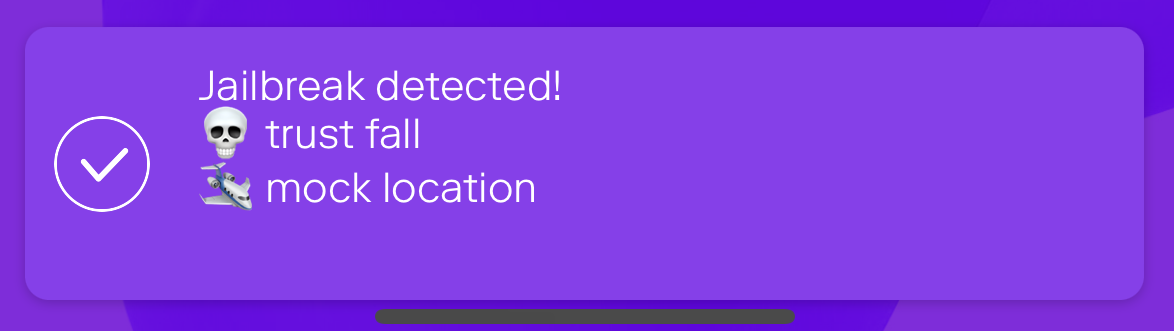

Jailbreak detection with jail-monkey on React Native app

Mobile operating systems often restrict what the user can do on the device, such as which apps can be installed and what information and data the apps and the user can access. These limitations can be bypassed by jailbreaking or rooting the device, which might introduce security…

-

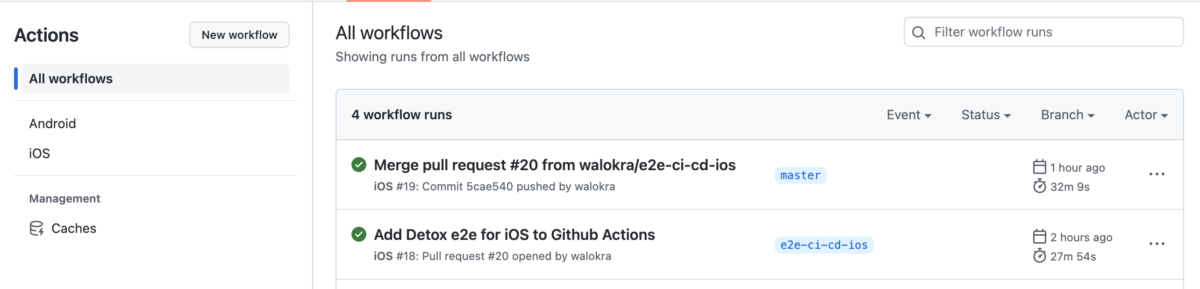

Running Detox end-to-end tests on CI

Now that you’ve added end-to-end tests with Detox to your React Native application, as described in my previous blog post, it’s time to automate running the tests and add them to a continuous integration (CI) pipeline. Detox has documentation for running it on some CI services, but it’s somewhat lacking and outdated. This post will show how…

-

Automated End-to-End testing React Native apps with Detox

Everyone knows the importance of testing in software development projects, so let’s jump directly to the topic of how to use Detox for end-to-end testing of React Native applications. It’s similar to how you would do end-to-end testing of React applications with Cypress, as I wrote previously. Testing React Native applications needs a bit more setup especially…

-

Notes from React Native EU 2022

React Native EU 2022 was held a couple of weeks ago. The conference focuses exclusively on React Native but also covers general software development topics applied to the RN context. This year the online event provided great talks, and there were especially many presentations about app performance improvements,…

-

Raspberry Pi 3+ and Joy-IT 7″ touchscreen on Debian 11

I’ve had a Joy-IT 7″ IPS display for Raspberry Pi waiting in my drawer for some time, and now I got around to putting it to use with an Ikea Ribba frame. Setting up the touchscreen was easy, but getting it inverted (upside down) took some extra steps. The touchscreen is “RB-LCD-7-2” from Joy-IT, which is…

-

Short notes on tech 31/2022

Software development: How to explain technical architecture with a natty little video. Some thoughts on explaining architecture through diagramming, in particular the advantage of scrappy videos to show diagramming step-by-step. (from DevOps Weekly) What Are Vanity Metrics and How to Stop Using Them. Measurement and metrics are an important part of devops practices, but establishing metrics always…

-

Short notes on tech 25/2022

Tools: CyberChef. Simple, intuitive web app for analysing and decoding data without having to deal with complex tools or programming languages. CyberChef encourages both technical and non-technical people to explore data formats, encryption and compression. Software development: The Art of Code Comments. Sarah Drasner talked at JSConf Hawaii 2020 about how commenting code is a more nuanced…

-

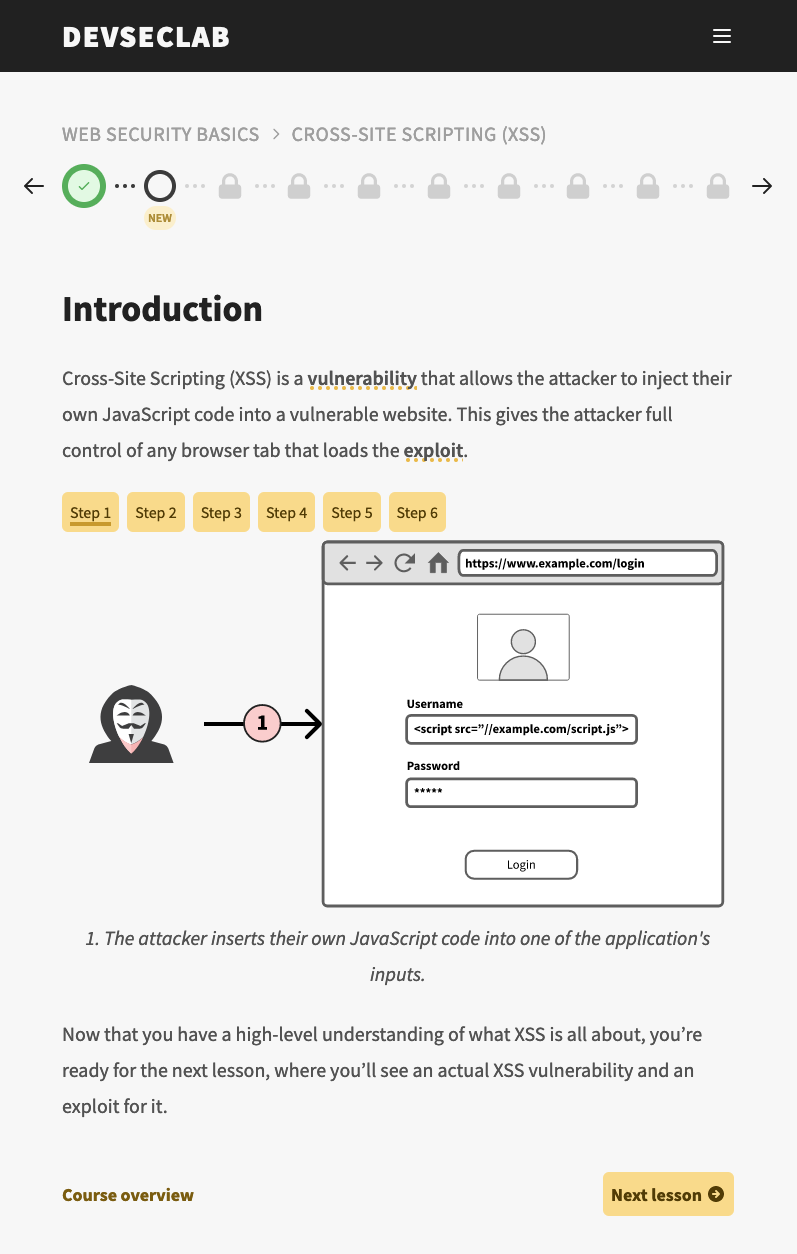

Learn to write secure code with DevSecLab

There are lots of pitfalls in software development, and creating a secure web application takes some thought, especially keeping the OWASP Top 10 in mind. One effective way to learn secure software development is to learn by doing, and that’s what DevSecLab by Fraktal provides: teaching developers to write secure code with hands-on exercises.…